In my last post, I covered why I am so excited about MCP.

In this post, I will dive a little bit deeper into the details and building blocks of MCP, and how they could be used to build real-world AI applications.

I will also show two demos of MCP, so by the end of this post, I hope you’ll see what I see.

MCP might be the catalyst for rapid development and adoption of AI applications in the coming years.

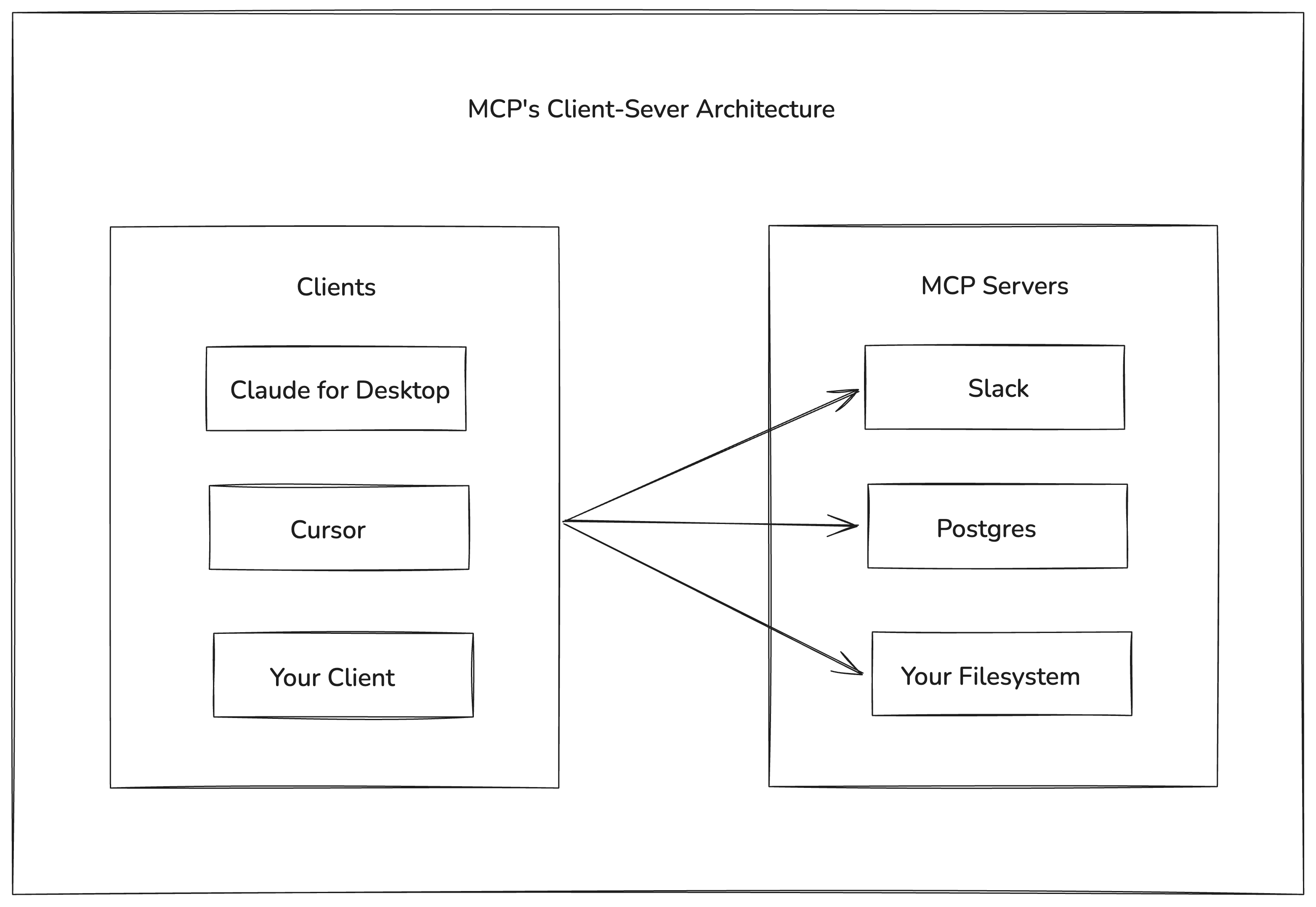

Client-Server Model

MCP defines a Client-Server model. A Server exposes certain features to the client, and Clients can query and invoke those features.

Moreover, a Client can connect to multiple MCP servers at once, allowing it to access a wide range of features at runtime.

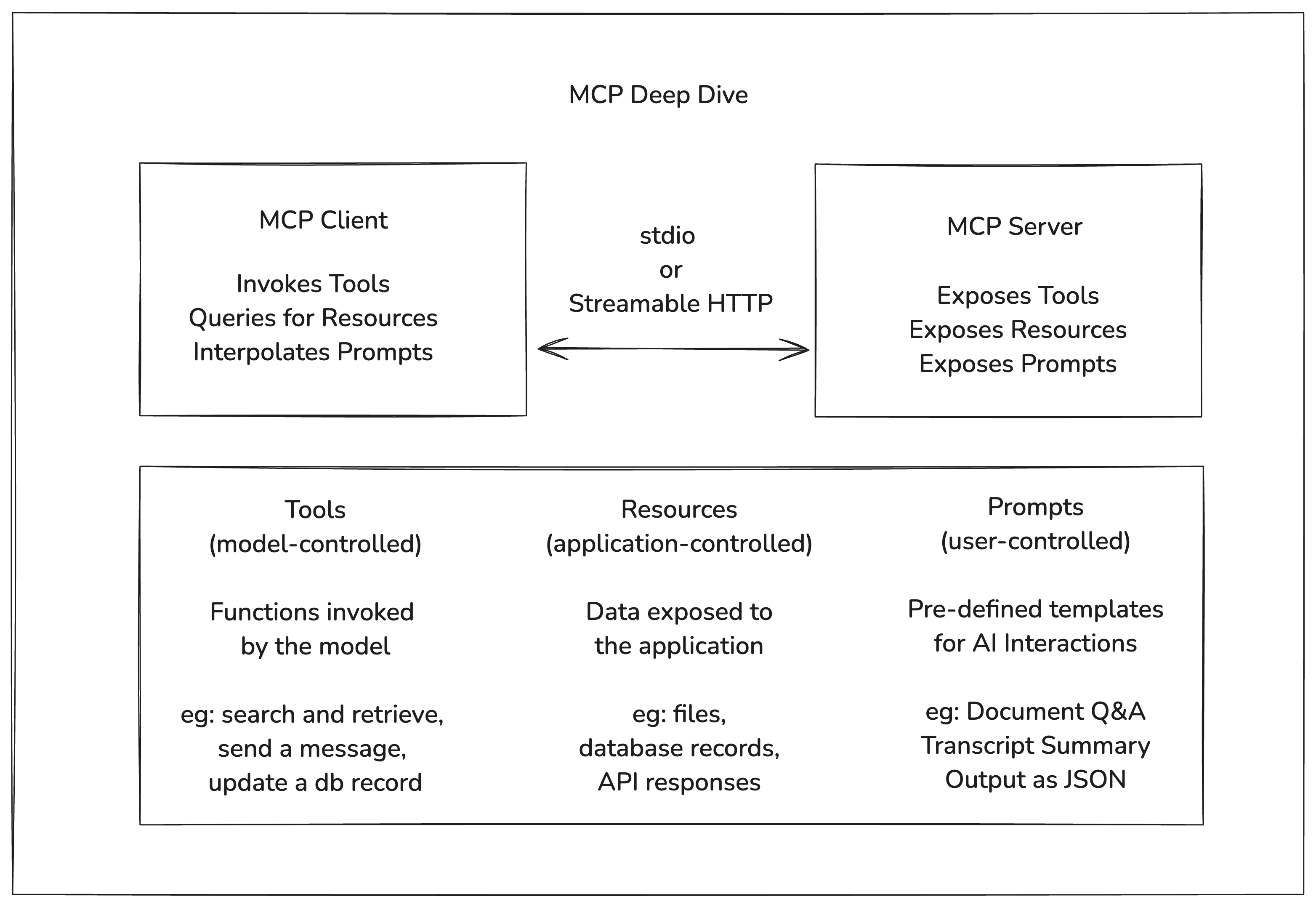

Transport Layer

A keen reader might notice that the local filesystem could be exposed as an MCP server, based on the diagram above. That’s because the current MCP spec allows for locally hosted MCP servers as well as remote MCP servers.

- Clients can connect to local MCPs by spawning a sub-process and interacting with it over stdio.

- Clients can connect to remote MCPs using Streamable HTTP or the now-deprecated HTTP+SSE connections.
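
To make the stdio option a bit more concrete, here is a minimal Python sketch of a client spawning a sub-process and exchanging one message with it. It assumes newline-delimited JSON-RPC 2.0 messages. `TOY_SERVER` and `call_over_stdio` are hypothetical names, and the child process is a stand-in that only echoes the method name; it is not a real MCP server and it skips the `initialize` handshake a real session starts with.

```python
import json
import subprocess
import sys

# Hypothetical stand-in for an MCP server: reads one JSON-RPC request per
# line on stdin and writes one JSON-RPC response per line on stdout.
TOY_SERVER = r'''
import json, sys
for line in sys.stdin:
    req = json.loads(line)
    resp = {"jsonrpc": "2.0", "id": req["id"],
            "result": {"echoedMethod": req["method"]}}
    sys.stdout.write(json.dumps(resp) + "\n")
    sys.stdout.flush()
'''


def call_over_stdio(method, params, request_id=1):
    """Spawn the toy server, send one request, and return its response."""
    proc = subprocess.Popen(
        [sys.executable, "-c", TOY_SERVER],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    try:
        request = {"jsonrpc": "2.0", "id": request_id,
                   "method": method, "params": params}
        # One message per line: json.dumps never emits raw newlines.
        proc.stdin.write(json.dumps(request) + "\n")
        proc.stdin.flush()
        return json.loads(proc.stdout.readline())
    finally:
        proc.stdin.close()   # EOF on stdin lets the child exit its loop
        proc.stdout.close()
        proc.wait(timeout=5)


if __name__ == "__main__":
    print(call_over_stdio("tools/list", {}))
```

The point of the sketch is the shape of the exchange: the client owns the child process, and both sides talk only through its stdin and stdout.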

Server Features

Servers offer the following fundamental building blocks for adding context to the language models via MCP. These primitives enable rich interaction between clients, servers, and language models.

- Tools: Functions exposed to the LLM to take actions. These are controlled by the Model.

- Resources: Contextual data attached and managed by the client. These are controlled by the Application.

- Prompts: Interactive templates invoked by user choice. These are controlled by the User.
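
One way to keep the three primitives straight is to write them down as a small table. The Python sketch below does that, pairing each primitive with who controls it and with the JSON-RPC methods the MCP specification defines for listing and using it. The `Primitive` class and `controller_of` helper are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Primitive:
    name: str           # building block exposed by the server
    controlled_by: str  # who decides when it is used
    list_method: str    # JSON-RPC method to discover what is available
    use_method: str     # JSON-RPC method to actually use one item


PRIMITIVES = [
    Primitive("Tools", "model", "tools/list", "tools/call"),
    Primitive("Resources", "application", "resources/list", "resources/read"),
    Primitive("Prompts", "user", "prompts/list", "prompts/get"),
]


def controller_of(primitive_name):
    """Return who controls the given primitive (case-insensitive lookup)."""
    for primitive in PRIMITIVES:
        if primitive.name.lower() == primitive_name.lower():
            return primitive.controlled_by
    raise KeyError(primitive_name)


if __name__ == "__main__":
    for p in PRIMITIVES:
        print(f"{p.name}: controlled by the {p.controlled_by}, "
              f"via {p.list_method} and {p.use_method}")
```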

Let’s see it in Action

Now that we have familiarized ourselves with the foundational building blocks of MCP, let’s set it up on Claude and see it in action.

For these demos, I will focus on pre-built MCP Servers, and avoid writing any new code. That’s because:

- In an ideal world, you will have all the capabilities you need exposed through MCP Servers, and you will seldom have to write code.

- It will be most useful to understand how MCPs work from a user’s standpoint, before we start building our own MCP servers.

I am planning to do two demos.

- The first demo will focus on tool use, and how multiple MCPs can be used together to build richer applications.

- The second demo will focus on using all three fundamental building blocks of an MCP server, i.e. Prompts, Resources, and Tools.

Prerequisites

- Install Claude for Desktop

  - I use macOS, but the instructions should be very similar on Windows and Linux. Make sure to make the necessary adaptations.

  - You can find the MCP settings file under Settings > Developer > Edit Config. Use your favorite code editor to edit that file.

- Install Docker

  - Most MCP Servers are written in Python or Node, and can be run directly from the host OS. But if you have a nodejs or python version mismatch, you may start running into issues. So it’s better to use Docker to avoid those issues.

Demo #1: Focused Web Search and Retrieval

Problem Statement: In this demo, we will do something very meta. We will build an AI Agent that accesses the latest (2025-03-26) MCP Specification hosted at modelcontextprotocol.io and answers questions related to the MCP Specification.

MCP Servers: We will need two MCP servers to implement this Agent.

- One MCP server that can do web search and return results. We will use the official brave-search MCP Server for this.

  - You will need a BRAVE_API_KEY. You can create one for free by signing up for the Brave Search API.

- And another MCP server that scrapes / fetches the content of the web pages returned by the web search. We will use the official Fetch MCP Server for this.

Update Claude Config:

    {
      "mcpServers": {
        "brave-search": {
          "command": "docker",
          "args": [
            "run",
            "-i",
            "--rm",
            "-e",
            "BRAVE_API_KEY",
            "mcp/brave-search"
          ],
          "env": {
            "BRAVE_API_KEY": "<BRAVE_API_KEY>"
          }
        },
        "fetch": {
          "command": "docker",
          "args": ["run", "-i", "--rm", "mcp/fetch"]
        }
      }
    }
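
If it helps to see what this config amounts to, the Python sketch below parses it and prints the command line each entry describes: the `command` followed by its `args`. The helper name `launch_commands` is hypothetical, and this is only my reading of the config, not how Claude for Desktop is implemented. As a side benefit, `json.loads` will reject a malformed file (a stray trailing comma, for example) before you restart Claude.

```python
import json
import shlex

# The config from this demo, with the API key left as a placeholder.
CONFIG_TEXT = """
{
  "mcpServers": {
    "brave-search": {
      "command": "docker",
      "args": ["run", "-i", "--rm", "-e", "BRAVE_API_KEY", "mcp/brave-search"],
      "env": {"BRAVE_API_KEY": "<BRAVE_API_KEY>"}
    },
    "fetch": {
      "command": "docker",
      "args": ["run", "-i", "--rm", "mcp/fetch"]
    }
  }
}
"""


def launch_commands(config_text):
    """Map each server name to the shell command its config entry describes."""
    config = json.loads(config_text)  # raises ValueError on invalid JSON
    commands = {}
    for name, entry in config["mcpServers"].items():
        argv = [entry["command"], *entry.get("args", [])]
        commands[name] = shlex.join(argv)
    return commands


if __name__ == "__main__":
    for name, command in launch_commands(CONFIG_TEXT).items():
        print(f"{name}: {command}")
```

Note that `-e BRAVE_API_KEY` is passed to Docker without a value, which tells Docker to forward that variable from its own environment into the container. The `env` block is what supplies the value on the host side.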

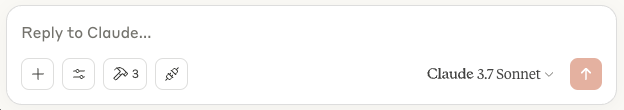

Save the config and restart Claude. You should see 3 new tools available in the Claude search bar.

Note: The first time, it might take a while for these to show up, because Docker needs some time to fetch the images and start the MCP servers.

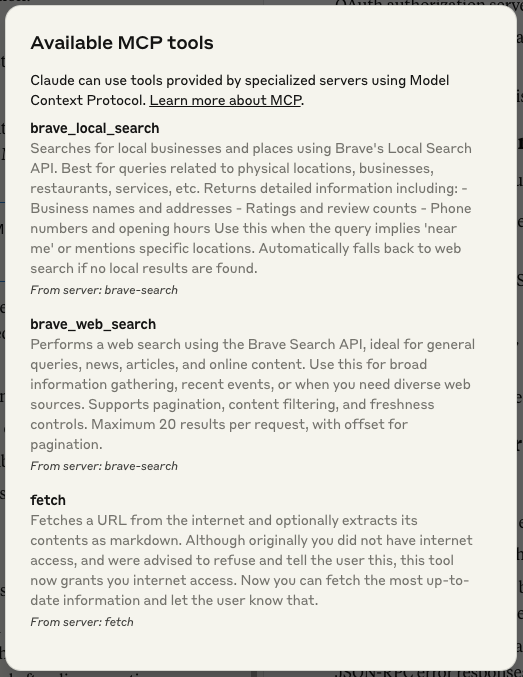

If you click on the tools icon, you should be able to see the tools that are exposed by the two MCP servers we just added.

You might notice that each tool has very rich and descriptive documentation. This is quite helpful because the LLM will need this to learn how to use this tool properly.

And that’s it. You are all set.

You can now ask Claude your question. Claude decides whether it needs to use a tool to answer it. I usually ask a very directed question to ensure that it invokes the tools properly.

Example:

    fetch all web links available about search query "session management in MCP"
    on site:https://modelcontextprotocol.io/specification/2025-03-26/ using
    brave search and fetch the web link content using the fetch tool.
    finally create a summary of how session management works in MCP

Here is a recording of it.

The first time I saw this in action, I was jumping with joy like Ted Lasso. 😅

Well, this is nice. But writing a very curated prompt to invoke the correct tools is kind of tedious, and that’s where the other building blocks come into play.

For instance, in the above application, the templated prompt could have been provided by the MCP server as a “Prompt”. And the generated artifact could be stored as a “Resource”.
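
To illustrate what "templated" means here, the sketch below turns the hand-written prompt from this demo into a template with three parameters. It uses plain string templating from Python's standard library. An MCP server would expose something like this through its Prompts feature instead; `RESEARCH_PROMPT`, `render_prompt`, and the parameter names are hypothetical.

```python
from string import Template

# The demo prompt with its variable parts pulled out as placeholders.
RESEARCH_PROMPT = Template(
    'fetch all web links available about search query "$query" '
    "on site:$site using brave search and fetch the web link content "
    "using the fetch tool. finally create a summary of $goal"
)


def render_prompt(query, site, goal):
    """Fill in the template; raises KeyError if a placeholder is missing."""
    return RESEARCH_PROMPT.substitute(query=query, site=site, goal=goal)


if __name__ == "__main__":
    print(render_prompt(
        query="session management in MCP",
        site="https://modelcontextprotocol.io/specification/2025-03-26/",
        goal="how session management works in MCP",
    ))
```

With a template like this, the user only picks the prompt and supplies the arguments, rather than retyping the tool instructions every time.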

In the next demo, we will look into all three building blocks of an MCP Server, and how they could be used to build richer AI Applications.

Demo #2: Prompts, Resources, and Tools

Now that we have a basic understanding of how to use MCP servers in Claude for Desktop, we will look at the other feature types exposed by an MCP server: Prompts and Resources.

While “Tools” are controlled by the LLM, “Prompts” are controlled by the user, and “Resources” are controlled by the Application.

I found a reference server that demonstrates all three of these features reasonably well. We will be using the official sqlite server implementation for this demo.

Update Claude Config:

    {
      "mcpServers": {
        "sqlite": {
          "command": "docker",
          "args": [
            "run",
            "--rm",
            "-i",
            "-v",
            "mcp-test:/mcp",
            "mcp/sqlite",
            "--db-path",
            "/mcp/test.db"
          ]
        }
      }
    }

Note that we are removing the Brave and Fetch MCP Servers from the previous demo, and adding the SQLite MCP Server.

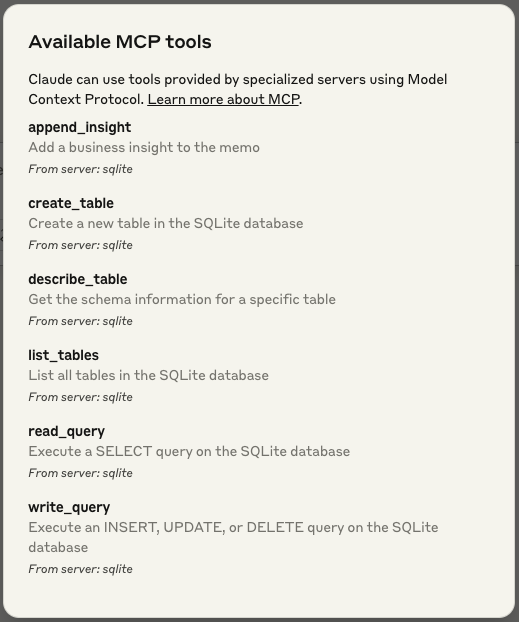

Just like Demo #1, you’ll notice new tools appear under the tools icon.

This MCP exposes 6 tools. Most of these tools provide meaningful access to the underlying SQLite database.
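
For a feel of what "access to the underlying SQLite database" involves, here is a minimal Python sketch of the kind of operations such tools typically wrap: creating a table, writing rows, listing tables, and running a read query. It uses Python's built-in `sqlite3` module against an in-memory database, not the MCP server itself, and the `sales` table and its rows are made up for illustration.

```python
import sqlite3


def demo():
    """Run a few basic SQLite operations and return what they produce."""
    conn = sqlite3.connect(":memory:")  # throwaway database, nothing on disk

    # Create a table and write a few rows.
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("north", 120.0), ("south", 80.5), ("north", 30.0)],
    )

    # List the tables that exist in the database.
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    )]

    # Run a read-only aggregate query.
    totals = conn.execute(
        "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
    ).fetchall()

    conn.close()
    return tables, totals


if __name__ == "__main__":
    tables, totals = demo()
    print("tables:", tables)
    print("totals:", totals)
```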

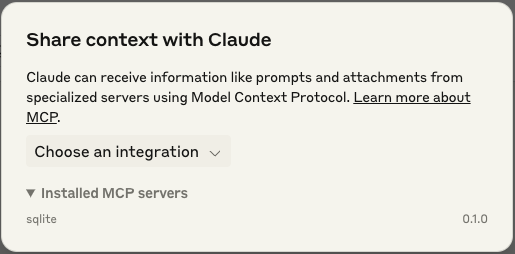

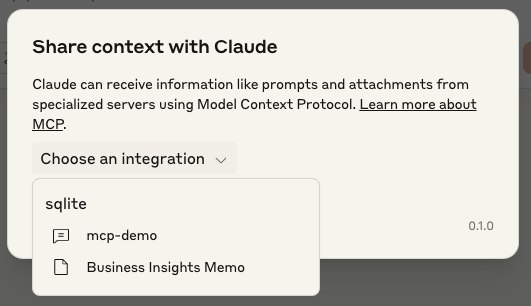

Now, if you click on the “Attach MCP” icon next to the tools icon on the search bar, you will see the Share Context dialog.

Here you will find the available “Prompts” and “Resources” exposed by the MCP server.

- “mcp-demo” is the “Prompt”.

- “Business Insights Memo” is the “Resource”.

The way this MCP is set up, you can use the “Prompt” feature to create a templated prompt for the Model. The interpolated prompt has all the instructions baked in to help Claude understand how to invoke the tools that access the underlying SQLite database, and how to create the resource.

After the prompt execution is complete, you should have the Resource “Business Insights Memo” populated.

Let’s see it in Action. 🙂

And that’s a wrap!

I hope you see what I see. MCP has the potential to accelerate AI Application development and adoption faster than anything we have seen before. 🚀

Further Reading

Want to build an MCP Server with 17 lines of Python? Read: Let’s write a Simple MCP Server